If you follow AI news, even casually, you’re accustomed to having your mind blown at fairly regular intervals. This week, however, I witnessed something more unsettling than corporate boards playing Game of Thrones with technology that could destroy humanity, and it came from a brief demo in a YouTube video on Matthew Berman’s channel. (If you’re unfamiliar with his excellent content, you can find it here.)

The demo was of OthersideAI’s new project, Self-Operating Computer (https://github.com/OthersideAI), and a few days after watching it I’m still shuffling around, murmuring to myself and thinking about it. Self-Operating Computer is described by its creators as “A framework to enable multimodal models to operate a computer” and, based on Matt’s demo, this is exactly what it does. The install was quick and easy and the prompt was straightforward. It appears to work by taking screenshots of your screen and sending them as context, through an API, to an LLM such as GPT-4V, which then decides how to operate your mouse and keyboard.

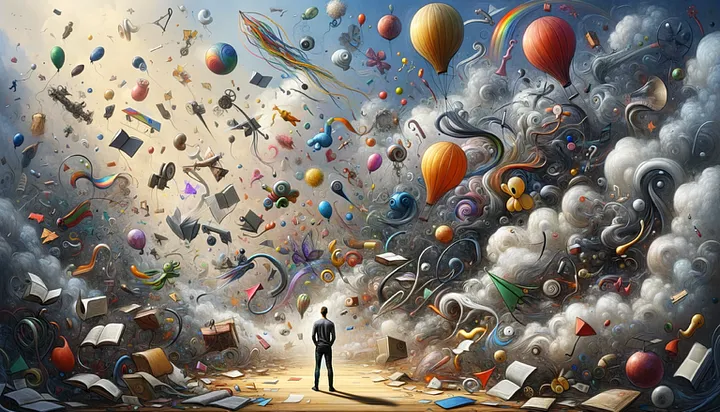
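For readers who think in code, the screenshot-then-act loop described above can be sketched roughly as follows. This is my own minimal illustration of the idea, not the project's actual implementation: the `Action` type, the `operate` function, and the three callables passed into it (`capture`, `ask_model`, `perform`) are all hypothetical names I made up so the sketch can run without any real screen, model, or mouse.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    """One step chosen by the model (hypothetical shape, for illustration only)."""
    kind: str      # "click", "type", or "done"
    x: int = 0     # screen coordinates, used by "click"
    y: int = 0
    text: str = "" # keystrokes, used by "type"


def operate(
    objective: str,
    capture: Callable[[], bytes],
    ask_model: Callable[[str, bytes, List[Action]], Action],
    perform: Callable[[Action], None],
    max_steps: int = 10,
) -> List[Action]:
    """Repeat: take a screenshot, ask the model what to do next, then do it."""
    history: List[Action] = []
    for _ in range(max_steps):
        screenshot = capture()  # everything visible on screen goes to the model
        action = ask_model(objective, screenshot, history)
        history.append(action)
        if action.kind == "done":
            break
        perform(action)  # in a real tool, this would move the mouse or type keys
    return history
```

In a real tool, `capture` would grab the screen, `ask_model` would call a vision-capable LLM, and `perform` would drive the mouse and keyboard. The point of the sketch is simply that every pass through the loop ships a fresh picture of your desktop off to the model.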

And, for me, that is the astounding part. Having a system that can actually operate your PC/Mac the same way a human would opens up possibilities that I won’t embarrass myself by trying to list, except to say that accessibility innovations, improved learning techniques, and business automation feel like obvious places to start. Oh, and yeah, I suppose you could use it to move your mouse to keep your Teams/Slack status green as well.

Before we get to the terrifying part, if you’re unfamiliar with OpenAI’s GPT Vision, it is essentially a method of using images as a way to communicate with an LLM. Generating analysis from an image of a chart, transcribing handwritten notes, and creating an application from a sketch are all ideas that are being explored by GPT-4 users already. It’s really quite amazing in terms of its ability to decipher things in images that humans can and cannot see. Several great examples of this can be found in this video by Andrej Karpathy (which you can find here). You can skip to the 50:30 mark of the video to see the examples, but watching the whole thing is definitely worth your time regardless of how much, or little, you know about AI/LLMs.
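To make “using images as a way to communicate with an LLM” a little more concrete, here is a small sketch of how an image might be packaged alongside a text prompt. The message layout follows my understanding of the format OpenAI documents for image inputs in chat messages (a text part plus an `image_url` part carrying a base64 data URL); treat that as an assumption and check the current documentation before relying on it. The function name `build_vision_message` is hypothetical, and the sketch only builds the message; it does not call any API.

```python
import base64


def build_vision_message(prompt: str, png_bytes: bytes) -> dict:
    """Bundle a text prompt and a PNG image into one chat message (assumed layout)."""
    # The raw image bytes are base64-encoded and embedded directly in the request.
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/png;base64,{encoded}"},
            },
        ],
    }
```

Notice that nothing is filtered along the way: whatever is in the image, the whole image, is what the model receives.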

Back to the point: what I find unsettling, and just a little terrifying, is the kind of information that is sent to the LLM in order to provide enough context for it to know how to control the host. Installing and running code on a host is complex for a would-be attacker, but the market is full of techniques to do that already (think RDP, TeamViewer, etc., not to mention the ones that, uhhh, are ‘commercially available’). The challenge in today’s security market isn’t to stop every attack, which is impossible, but to make it as hard as possible for attackers to successfully attack your network/host/server/account. Sending pictures of your desktop to an LLM reminds me of the days when hackers would post over-the-shoulder pictures, mostly taken in airports, of people’s laptop screens and laugh about the details they were able to glean from them.

If you’re trying to keep your laptop secure, sharing information such as this makes it just too easy for someone looking to make your life miserable:

- Operating System

- VPN Client

- Installed software

- Installed corporate software (think M365, G-Suite, etc.)

- Your role in the company (Got Terminal open? You’re likely not an executive. CRM open? You’re in sales, etc.)

- Do you update your software? Has Chrome been patched? (That red “Update” button tells a story)

- Phishing fodder (Do you shop on Amazon? Is that a backpack I see you’re researching? Are you expecting a FedEx package?)

This notion of using images (or video) from your laptop is coming at us fast. There are commercially available products doing exciting things in this space, such as Rewind (https://www.rewind.ai) and Backtrack (https://usebacktrack.com), and I’m certain there will be more soon.

But back to Matthew Berman’s video. You can hear the fear in his voice as he hands complete control of his laptop to ‘something else’, and I think that fear is justified. This technology could definitely help people who need it. It’s just that I’ve got questions about the information in the prompt that is sent to the LLM, and I can’t shake the feeling that ‘unintended consequences’ might be a common phrase in the coming weeks and months.